Radiology AI today: fragmented tools, uneven results

The digital health landscape in radiology is saturated. Most tools available to hospitals today are built narrowly — multiple studies of FDA-cleared radiology AI/ML tools show that a large share are intended for specific pathologies or imaging modalities (e.g. pulmonary nodules; tube and line positioning; emergent findings). While this “modular” approach reflects how AI tools are often developed and certified, it creates a difficult operational reality: fragmented pipelines, parallel viewers, conflicting outputs, and increased cognitive load for radiologists.

For hospital executives, this creates tension between strategic ambitions and practical bottlenecks. In a 2023 survey of 100 hospital executives, 62% said the digital health market is “difficult to navigate,” and more than half reported that solutions “often do not work as desired” once implemented. Many radiology departments now find themselves running multiple AI tools simultaneously, each requiring separate integration, validation, and monitoring.

xAID’s proposition: all-in-one multi-pathology AI

Positioned as a counterpoint to this fragmentation, xAID presents itself as a multi-pathology AI “copilot” that analyzes chest/abdomen CTs. According to the company, it detects, segments, and packages results for over 20 acute pathologies in parallel, targeting emergency department (ED) workflows where speed and breadth are critical.

The founder shared with us a preprint of the peer-reviewed report “Potential role of AI in the evaluation of unenhanced chest CTs in an emergency setting”, which presents the results of a retrospective single-center study evaluating the sensitivity and specificity of xAID for automated reading of non-contrast chest CT scans. The evaluated pathologies were lung nodules, lung opacifications, coronary calcification, aortic and pulmonary dilatation, pleural and pericardial effusions, pneumothorax, rib and vertebral fractures, and adrenal masses.

The study included 90 hospitalized patients. The AI software demonstrated non-inferior pooled sensitivity and specificity compared with the reporting radiologists: pooled sensitivity was 92.2% vs 58.3%, and pooled specificity 95.6% vs 80.6%.
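A pooled figure aggregates results across all evaluated pathologies. One common way to compute it, assumed here because this article does not describe the preprint's exact method, is to sum the confusion-matrix counts first and then divide: sensitivity = TP / (TP + FN), specificity = TN / (TN + FP). The counts in this Python sketch are invented for illustration and are not the study's data.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

@dataclass
class Counts:
    """Confusion-matrix counts for one pathology (illustrative numbers only)."""
    tp: int  # true positives
    fn: int  # false negatives
    tn: int  # true negatives
    fp: int  # false positives

def pooled_sensitivity_specificity(per_pathology: Dict[str, Counts]) -> Tuple[float, float]:
    """Sum counts across pathologies, then compute TP/(TP+FN) and TN/(TN+FP)."""
    tp = sum(c.tp for c in per_pathology.values())
    fn = sum(c.fn for c in per_pathology.values())
    tn = sum(c.tn for c in per_pathology.values())
    fp = sum(c.fp for c in per_pathology.values())
    return tp / (tp + fn), tn / (tn + fp)

# Hypothetical counts for 90 patients per pathology; NOT taken from the xAID preprint.
example = {
    "pneumothorax": Counts(tp=9, fn=1, tn=78, fp=2),
    "rib_fracture": Counts(tp=18, fn=2, tn=66, fp=4),
}
sens, spec = pooled_sensitivity_specificity(example)
print(f"sensitivity={sens:.1%} specificity={spec:.1%}")  # sensitivity=90.0% specificity=96.0%
```

Because counts are summed, pathologies with many positive cases weigh more heavily than rare ones, so a per-pathology breakdown is worth reading alongside any pooled number.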

The provider emphasizes usability over diagnostic accuracy: that is, whether radiologists find the system helpful as a support tool, not whether it “beats” human readers. The system is not yet on the FDA’s AI-Enabled Medical Device List, nor is it CE-marked.

We asked the founder of xAID, Kirill Lopatin, to walk us through the system’s core workflow.

2Digital: Walk me through xAID’s end-to-end flow on an ED case (chest/abdomen/pelvis CT).

Kirill: Concerning DICOM ingestion, a CT study is auto-routed from PACS or modality to xAID through standard DICOM. Next, our AI inference models analyze the full volume, detecting and segmenting multiple findings. During output packaging, results are written in standard formats: DICOM SR (structured report) with measurements, DICOM SEG/RT-STRUCT for overlays, and secondary capture images with highlighted findings. Finally, in the radiologists’ regular PACS viewer, they see overlays directly on the images plus a concise structured report summarizing all pathologies and measurements. Additionally, if integrated with reporting software, the report text can auto-populate into the draft. Everything happens absolutely seamlessly.
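The packaging step Kirill describes can be pictured as routing each finding into up to three kinds of DICOM object. The Python sketch below uses a hypothetical data model (`Finding`, `OutputObject`, and `package_results` are invented for illustration, not xAID’s code); only the SOP Class UIDs are standard DICOM values. A real implementation would build complete DICOM datasets, for example with a library such as highdicom or pydicom.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Standard DICOM Storage SOP Class UIDs (defined in DICOM PS3.4).
SOP_CLASS_UIDS = {
    "SR": "1.2.840.10008.5.1.4.1.1.88.33",        # Comprehensive SR Storage
    "SEG": "1.2.840.10008.5.1.4.1.1.66.4",        # Segmentation Storage
    "RTSTRUCT": "1.2.840.10008.5.1.4.1.1.481.3",  # RT Structure Set Storage
    "SC": "1.2.840.10008.5.1.4.1.1.7",            # Secondary Capture Image Storage
}

@dataclass
class Finding:
    """One AI finding (hypothetical data model)."""
    pathology: str
    measurement_mm: Optional[float] = None  # e.g. nodule diameter or aortic width
    has_mask: bool = False                  # whether a segmentation mask exists

@dataclass
class OutputObject:
    """Placeholder for a DICOM object to be sent back to PACS."""
    kind: str
    sop_class_uid: str
    contents: List[str] = field(default_factory=list)

def package_results(findings: List[Finding], use_rtstruct: bool = False) -> List[OutputObject]:
    """Route every finding into SR text, an overlay object, and key-image captures."""
    sr = OutputObject("SR", SOP_CLASS_UIDS["SR"])
    overlay_kind = "RTSTRUCT" if use_rtstruct else "SEG"
    overlay = OutputObject(overlay_kind, SOP_CLASS_UIDS[overlay_kind])
    sc = OutputObject("SC", SOP_CLASS_UIDS["SC"])
    for f in findings:
        # Every finding gets a line in the structured report, with its measurement if any.
        if f.measurement_mm is None:
            sr.contents.append(f.pathology)
        else:
            sr.contents.append(f"{f.pathology}: {f.measurement_mm} mm")
        # Only findings with a mask contribute to the overlay object.
        if f.has_mask:
            overlay.contents.append(f.pathology)
        # One secondary capture image highlighting each finding.
        sc.contents.append(f"key image: {f.pathology}")
    # Skip empty objects (e.g. no masks means no SEG/RTSTRUCT is sent).
    return [obj for obj in (sr, overlay, sc) if obj.contents]

findings = [
    Finding("pneumothorax", has_mask=True),
    Finding("lung nodule", measurement_mm=6.0, has_mask=True),
    Finding("coronary calcification"),
]
for obj in package_results(findings):
    print(obj.kind, obj.sop_class_uid, obj.contents)
```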

This “overlay-first” output design (SEG, SR, and secondary capture) is built on standard DICOM objects, with HL7 and FHIR interfaces for reporting systems, and avoids proprietary viewers. But it assumes radiologists are comfortable reading multiple findings simultaneously, and that PACS configurations across sites will correctly interpret and display the data without manual adjustments.

Increasing interoperability

Technically, standards compliance does not mean instant interoperability, and local IT adaptation is often required. Hospitals vary widely in PACS architecture, firewall rules, and reporting systems. Different vendors implement DICOM SR or SEG differently, leading to inconsistent overlays or incomplete metadata.
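Before go-live, a site can compare the object types an AI node emits against the SOP classes its archive and viewer declare support for, typically in their DICOM Conformance Statements. The Python sketch below assumes those supported-class lists have already been extracted by hand; `integration_gaps` and the site data are hypothetical, while the UIDs are standard DICOM values.

```python
from typing import Dict, Set

# SOP classes the AI node emits (standard DICOM UIDs; which ones a vendor uses is an assumption).
AI_OUTPUTS = {
    "1.2.840.10008.5.1.4.1.1.88.33": "Comprehensive SR",
    "1.2.840.10008.5.1.4.1.1.66.4": "Segmentation",
    "1.2.840.10008.5.1.4.1.1.7": "Secondary Capture",
}

def integration_gaps(pacs_storage: Set[str], viewer_display: Set[str]) -> Dict[str, str]:
    """List AI outputs that the site cannot store, or can store but not render."""
    gaps: Dict[str, str] = {}
    for uid, name in AI_OUTPUTS.items():
        if uid not in pacs_storage:
            gaps[name] = "not accepted for storage"
        elif uid not in viewer_display:
            gaps[name] = "stored but not rendered"
    return gaps

# Hypothetical site: the archive accepts all three, but the viewer ignores SEG overlays.
site_storage = set(AI_OUTPUTS)
site_display = {"1.2.840.10008.5.1.4.1.1.88.33", "1.2.840.10008.5.1.4.1.1.7"}
print(integration_gaps(site_storage, site_display))
# {'Segmentation': 'stored but not rendered'}
```

An empty result is necessary but not sufficient: two viewers that both list SEG support may still render it differently, so a visual check on test studies remains part of deployment.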

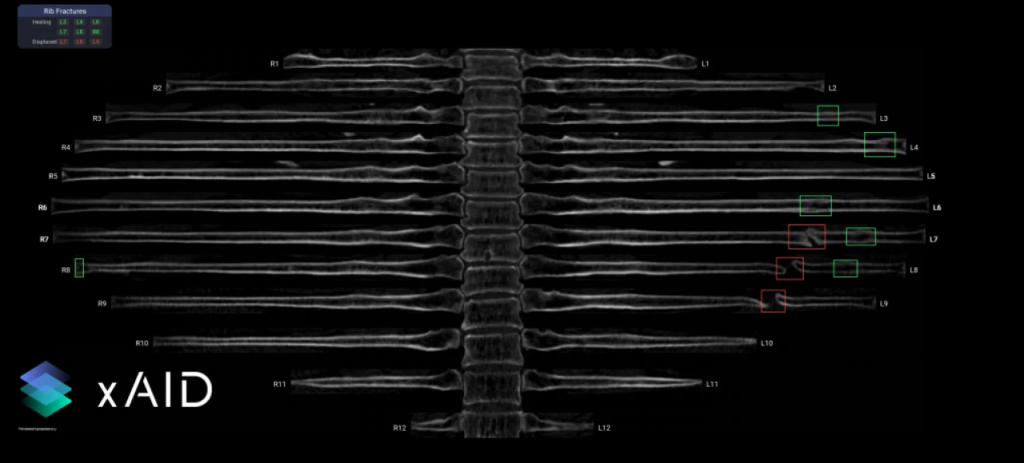

Picture: xAID co-pilot showing rib fractures. Green boxes – healed fractures, red boxes – displaced fractures

Marketplaces such as Nuance Precision Imaging Network (PIN), CARPL.ai, and Blackford attempt to centralize this customization, making tools closer to “plug-and-play.”

xAID positions itself as “viewer-agnostic” and compliant with open standards. If that holds in practice, hospitals would need little or no custom integration work, a key differentiator for CIOs managing dozens of legacy systems.

2Digital: Can your AI plug-and-play with existing PACS/EHR using open standards (no custom work)?

Kirill: Yes. We use standard DICOM, HL7, and FHIR interfaces. That means no custom code or vendor lock-in. Hospitals can route studies to us the same way they would route to any modality, and results flow back into their existing PACS, RIS, or reporting system. We’re “viewer-agnostic” by design.
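Routing a study “the same way they would route to any modality” usually means a forwarding rule in the PACS or a DICOM router that inspects header fields and sends matching studies to an extra network destination. The Python sketch below only illustrates the rule logic; the AE title, host, port, and accepted body-part values are hypothetical placeholders, and the actual transfer (a DICOM C-STORE) would be performed by the router or a library such as pynetdicom.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass(frozen=True)
class Destination:
    """A DICOM network node (Application Entity)."""
    ae_title: str
    host: str
    port: int

# Hypothetical AI node; the AE title, host, and port are placeholders.
AI_NODE = Destination(ae_title="AI_NODE", host="10.0.0.50", port=104)

# Example BodyPartExamined values to forward; real sites populate this tag inconsistently.
TARGET_BODY_PARTS = {"CHEST", "ABDOMEN", "CHESTABDOMEN", "CHESTABDPELVIS"}

def route(header: Dict[str, str]) -> List[Destination]:
    """Return the extra destinations for a study based on a few DICOM header fields."""
    if header.get("Modality") != "CT":
        return []
    # Normalise free-text variants such as "Chest Abdomen" -> "CHESTABDOMEN".
    body_part = header.get("BodyPartExamined", "").upper().replace(" ", "")
    return [AI_NODE] if body_part in TARGET_BODY_PARTS else []

print(route({"Modality": "CT", "BodyPartExamined": "Chest"}))  # forwarded to AI_NODE
print(route({"Modality": "MR", "BodyPartExamined": "CHEST"}))  # []
```

Keying on header fields keeps the rule vendor-neutral, but it inherits whatever inconsistencies exist in how each scanner fills those fields.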

QA mode and model drift

Perhaps the most quietly consequential part of any AI deployment is post-deployment performance monitoring, something many vendors underdeliver on. xAID addresses this via “silent QA mode,” allowing hospitals to compare AI outputs to radiologist reports behind the scenes.
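At its core, a silent QA comparison splits each study’s findings into agreement and two kinds of discordance, then tallies them per pathology without touching the live report. The Python sketch below assumes report findings have already been turned into labels (by manual coding or text extraction, which is the hard part); the function names and data are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple

def compare_study(ai_findings: Set[str], report_findings: Set[str]) -> Dict[str, Set[str]]:
    """Split one study's findings into agreement and the two kinds of discordance."""
    return {
        "agree": ai_findings & report_findings,
        "ai_only": ai_findings - report_findings,      # AI false positive OR missed by the reader
        "report_only": report_findings - ai_findings,  # candidate AI false negative
    }

def silent_qa_summary(studies: List[Tuple[Set[str], Set[str]]]) -> Dict[str, Counter]:
    """Tally agreement and discordance per pathology across studies."""
    summary = {"agree": Counter(), "ai_only": Counter(), "report_only": Counter()}
    for ai_findings, report_findings in studies:
        for bucket, labels in compare_study(ai_findings, report_findings).items():
            summary[bucket].update(labels)  # adds 1 per label in the set
    return summary

# Hypothetical labels: (AI findings, radiologist-report findings) per study.
studies = [
    ({"pneumothorax", "rib_fracture"}, {"pneumothorax"}),
    ({"pleural_effusion"}, {"pleural_effusion", "rib_fracture"}),
    (set(), set()),
]
summary = silent_qa_summary(studies)
print(summary["ai_only"], summary["report_only"])
```

“AI-only” findings are not automatically AI errors; the preprint’s lower radiologist sensitivity suggests some will be reader misses, so discordant cases need adjudication before they count against either side.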

Picture: xAID co-pilot, showing segmentation and measurement of organs in the mediastinum and pleural cavity

2Digital: How do you handle post-deployment performance monitoring and model drift?

Kirill: We continuously track key performance indicators: detection rates, false positives, case mix, and turnaround times through our feedback platform. Where hospitals agree, we enable silent QA mode — AI runs in the background and results are compared with radiologist reports. This creates real-world performance dashboards and early alerts if drift occurs (e.g., scanner protocol changes, new population mix). Updates are delivered through our regulatory-compliant release pipeline, so hospitals always stay on a validated model version.
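Kirill does not say how drift alerts are computed. One simple approach, assumed here purely for illustration, is to track the share of studies the AI flags over a sliding window and alert when it strays too far from the rate observed during validation. The `DetectionRateMonitor` class, baseline, window size, and threshold below are hypothetical; the check uses a normal approximation to the binomial standard error.

```python
import math
from collections import deque

class DetectionRateMonitor:
    """Hypothetical drift check on the share of studies the AI flags as positive."""

    def __init__(self, baseline_rate: float, window: int = 200, z: float = 3.0):
        self.baseline = baseline_rate       # flag rate observed during local validation
        self.recent = deque(maxlen=window)  # 1 = AI flagged the study, 0 = not flagged
        self.z = z                          # alert threshold in standard errors

    def add(self, ai_flagged: bool) -> bool:
        """Record one study; return True if the current window suggests drift."""
        self.recent.append(1 if ai_flagged else 0)
        n = len(self.recent)
        if n < self.recent.maxlen:
            return False  # wait for a full window before judging
        rate = sum(self.recent) / n
        # Standard error of a proportion under the baseline rate (normal approximation).
        se = math.sqrt(self.baseline * (1 - self.baseline) / n)
        return abs(rate - self.baseline) > self.z * se

monitor = DetectionRateMonitor(baseline_rate=0.30, window=100)
stable = [monitor.add(i % 10 < 3) for i in range(100)]   # 30% flagged, matching baseline
shifted = [monitor.add(i % 10 < 6) for i in range(100)]  # 60% flagged, e.g. after a protocol change
print(any(stable), shifted[-1])  # False True
```

A change in flag rate can reflect a genuine shift in case mix as well as model degradation, so an alert is a prompt for review (for example, against silent-QA comparisons), not proof of drift.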

From a governance perspective, this offers a form of internal surveillance, allowing early detection of accuracy drop-off without relying on vendor self-reporting.

Marketplace alignment vs system dependency

With the rise of distribution marketplaces like Nuance PIN, CARPL.ai, and Blackford Analysis, many hospitals now prefer to source AI tools via trusted platforms rather than direct vendor agreements. xAID appears to align with this shift.

2Digital: Hospitals increasingly buy via platforms (Nuance PIN/Microsoft, etc.). How do you compete?

Kirill: We integrate with them rather than fight them. xAID is already partnering with CARPL.ai and other marketplaces. Our strategy is “open distribution”: hospitals can buy directly from us or via the ecosystem they already trust. The differentiation is in our breadth of coverage (20+ pathologies in one model), our results-based pricing model (we charge only if we produce findings), and the fact we provide comprehensive ED coverage rather than fragmented single-disease AI. Platforms become amplifiers, not competitors.

The “pay-per-finding” model is rare and potentially attractive, but these agreements should be approached with the same rigor as reimbursement contracts: clear definitions, caps, and auditing clauses. Should a small incidental lesion trigger billing, for example? Vendors, clinicians, and payers may differ on what counts as a billable event.
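To make “clear definitions, caps, and auditing clauses” concrete, the Python sketch below encodes one possible set of contract rules: a list of billable pathologies, a minimum size for incidental lesions, one charge per pathology per study, and a monthly cap. Every term, threshold, and price here is hypothetical and is not xAID’s actual pricing.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Set, Tuple

@dataclass(frozen=True)
class Finding:
    """One AI finding reported for a study (hypothetical data model)."""
    study_id: str
    pathology: str
    size_mm: Optional[float] = None
    incidental: bool = False

@dataclass(frozen=True)
class ContractTerms:
    """Hypothetical contract parameters; real values would be negotiated per site."""
    billable_pathologies: FrozenSet[str]  # the contract's definition of a billable finding
    price_per_finding: float              # in contract currency units
    min_incidental_size_mm: float         # smaller incidental lesions are not billed
    monthly_cap: float                    # ceiling on the monthly total

def monthly_invoice(findings: List[Finding], terms: ContractTerms) -> Tuple[float, List[Finding]]:
    """Apply the contract rules and return (capped total, findings actually billed)."""
    billed: List[Finding] = []
    seen: Set[Tuple[str, str]] = set()
    for f in findings:
        if f.pathology not in terms.billable_pathologies:
            continue  # outside the contractual definition
        if f.incidental and (f.size_mm is None or f.size_mm < terms.min_incidental_size_mm):
            continue  # small or unmeasured incidental lesion: not billable
        key = (f.study_id, f.pathology)
        if key in seen:
            continue  # bill each pathology at most once per study
        seen.add(key)
        billed.append(f)
    total = min(len(billed) * terms.price_per_finding, terms.monthly_cap)
    return total, billed

terms = ContractTerms(
    billable_pathologies=frozenset({"pneumothorax", "rib_fracture", "lung_nodule"}),
    price_per_finding=5.0,
    min_incidental_size_mm=6.0,
    monthly_cap=1000.0,
)
findings = [
    Finding("S1", "pneumothorax"),
    Finding("S1", "rib_fracture"),
    Finding("S1", "rib_fracture"),                       # second fracture, same study
    Finding("S2", "lung_nodule", 4.0, incidental=True),  # below the size threshold
    Finding("S3", "lung_nodule", 8.0, incidental=True),
    Finding("S3", "coronary_calcification"),             # not a billable pathology
]
total, billed = monthly_invoice(findings, terms)
print(total, [(f.study_id, f.pathology) for f in billed])
```

Returning the billed findings alongside the total provides the audit trail an auditing clause would rely on.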

xAID offers a case study in how AI in radiology is evolving: from narrow classifiers to integrated systems, from cloud silos to embedded overlays, and from fixed price tags to dynamic, outcome-based models.