Today, AI is simultaneously a religion and an irritant: some hope that humanity has gotten its hands on a tool for explosive development; for others, the mere label “made by artificial intelligence” is enough to cause irritation. People worry that LLMs will take jobs away from millions and, in the longer run, will become a superintelligence that enslaves humanity. And more and more often we hear that multibillion-dollar infusions into the industry are inflating a bubble that will soon burst.

Siarhei Besarab, a research chemist, visiting researcher at the Global Catastrophic Risk Institute (GCRI), techno-optimist, and AI skeptic, offers a cold shower both to those panicking in anticipation of the apocalypse and to those who believe AI will soon do everything for us. In his assessment, today’s LLMs can help with routine tasks but are almost useless where real science is needed: formulating questions and building causal explanations, rather than preparing a presentation for investors.

From monkey work to real science: where LLMs help and where they don’t

2Digital: As a scientist, how do you assess the help that AI gives to science?

Siarhei: Today, a neurogen is, so to speak, at the level of a student writing a diploma thesis: someone who doesn’t really understand anything in depth, but works very fast and diligently and can be used as “an extra pair of hands.” So artificial intelligence takes on all the senseless routine that used to devour a lot of scientists’ time. First and foremost, that means processing databases: trying out different hypotheses, automating analysis, writing basic code, and so on.

We are talking only about “monkey work,” the kind that scientists don’t like doing because there isn’t a drop of creativity in it. When people tell you about grand scientific breakthroughs made by AI, it is nothing more than PR.

2Digital: Then let’s define where AI today has really performed well, and where its usefulness is overstated.

Siarhei: Its usefulness is overstated when it comes to creating fundamental theories, giving causal explanations, and formulating scientific questions. I start laughing when I hear that AI has proved Fermat’s theorem. Neurogens, or so-called artificial intelligence, are currently capable only of parasitizing existing structures of knowledge; they cannot create anything new.

LLMs have performed very poorly in education, although at first the idea of personalized AI teachers was heavily hyped. But given AI’s consistently high rate of hallucinations, it became clear that using it to teach people fundamentals is ineffective, because it builds a significant and unacceptable share of “defects” into the knowledge being passed on.

The junior bottleneck: how automation can hollow out expertise

2Digital: Suppose AI in some areas is now at the level of a student. And that comes with a problem. All of us were once young specialists and went through the stage of “monkey work” that AI is doing now, mastering the basics of our profession. Now one can often hear that companies are cutting juniors, because they are easy to replace with LLMs. What does this threaten?

Siarhei: It threatens collapse. To explain, let me start from further back. Intelligence is a process. Skills are the result of that process. That is, a skill in itself is not intelligence. Demonstrating certain skills while performing a task is not intelligence and does not even testify to it. Intelligence is the ability to cope with new situations and to lay new paths.

From this point of view, most young specialists do not have intelligence in their field of knowledge as such. They acquire it by working for some time in a living environment, whether it is a factory, a research institute, a clinic, or a creative workshop. A specialist’s intelligence is nurtured by a process that we can describe as creativity.

And now more and more short-sighted managers are reducing the number of juniors, cutting off the basic rung of the ladder that forms professional intelligence in their employees. Sooner or later, mids and seniors will run out… and then what?

2Digital: Playing “devil’s advocate,” one could object: the speed of AI development suggests that in two or three years it will grow up to the level of mids, and they, too, can be replaced. And a little later — to an even higher rung…

Siarhei: That won’t work. Roughly speaking, juniors used to learn on routine tasks, and now that routine has been taken over by the neurogen. So the question is: where will the mids and seniors who currently validate the neurogen’s code come from later?

Have you tried writing code with AI? The neurogen produces it quickly, in blocks, and often it doesn’t work. If you don’t understand where and what it changed, you just send it back to be rewritten. But then the code falls apart even more. For example, I don’t know Ada, and I needed to fix something while compiling the coreboot BIOS firmware. After the AI’s edits the code didn’t work, and I kept trying to fix it with the neurogen again and again: I clarified, corrected, and tried to run it without success. It turned into an expanding spiral of erroneous code. Eventually, another LLM looked at the result of our joint “work” and said that everything had to be written from scratch. I lost a whole day. Now imagine a specialist at a large company blindly spending two or three days untangling code that never worked in the first place.

For a human, that is also a skill. A hypothetical junior who lost two or three days to this madness a couple of times will understand that it would have been faster to finish learning and write the code themselves. An LLM, though, can generate nonsense nonstop, around the clock…

Accordingly, by excluding juniors from the process — the ones who learned to work with “stupid” code — we end up with operators who don’t understand how their machines work. Everything literally stops at the very first breakdown.

2Digital: When we talk about the existential danger of AI, most often we mean a scenario like in Terminator, when the world is taken over by a super-mind that decided humans are an obstacle. How do you see this?

Siarhei: The thing to fear is not what is described in Terminator, but what is described in Idiocracy. That is, in my view, the most obvious threat. I’m talking about total cognitive dysfunction in a significant part of humanity. The brain is like a muscle: if you don’t use it, it atrophies (the well-known rule “use it or lose it”). Accordingly, by actively using neural networks, you delegate part of your cognitive functions to them. And many direct the resources that are freed up not toward learning and creativity, as the techno-optimists of the past dreamed, but toward watching TV series and doomscrolling a TikTok feed. In effect, by shifting onto neural networks the cognitive tasks that keep our brain in shape, we form what is called “cognitive debt.”

There was an article that described this phenomenon well. Every time you hand something over to the neurogen, your cognitive debt grows, and over the long term this is very bad for you personally. We can consider mindless use of neural networks an accelerator of cognitive aging: your body might be 40 years old while your brain is 70.

When I hear about a superhuman intelligence that will take over humanity, I ask: even if some hypothetical artificial general intelligence appears, what makes you think it will care about us at all? Do you personally worry much about the fate of the dust mites that live by the billions in your apartment? It is possible that such a hypothetical non-human AI simply won’t notice us. But here there is a dangerous fork: it might not notice us and move on, or it might accidentally crush us without noticing. Either way, right now we are reasoning about some “godlike” AI whose creation is still very far away.

The Canadian researcher Richard Sutton, whose ideas I share, notes that as long as the very concept of the LLMs being built carries traceable anthropomorphism (imitation of the human and the human-like, including human ways of processing information), we won’t see any outstanding breakthroughs in AI development. Roughly speaking, we can stay calm as long as AI continues to think in a human way and imitate humans, because you can’t get something new by copying ever larger volumes of the old. He puts it bluntly: “building in how we think we think does not work in the long run.”

Sutton considers the era of transformers and chatbots a dead-end branch on the path to superintelligence, mainly because these models cannot learn from their own experience or their own mistakes. What many people today perceive as “the neurogen’s memory” is in fact just a hard-coded wrapper. The chatbot itself does not and cannot remember chats; if such a function is needed, it is called externally.

It is precisely the scaling of compute, and not attempts to imitate human thinking, that can, in theory, put neurogens on the path to superintelligence. All recent breakthroughs in so-called AI happened not because these systems became smarter. They got the ability to use more computational resources and fewer human assumptions.

In this sense, the story of AlphaGo is quite illustrative: it first learned from a vast corpus of Go games played by human masters. But then a new version stopped using human experience altogether and instead played tens of millions of games against itself. The result: in 2017, the neurogen definitively defeated humans at this incredible game, which, by the way, is far more complex than chess and requires spatial thinking.

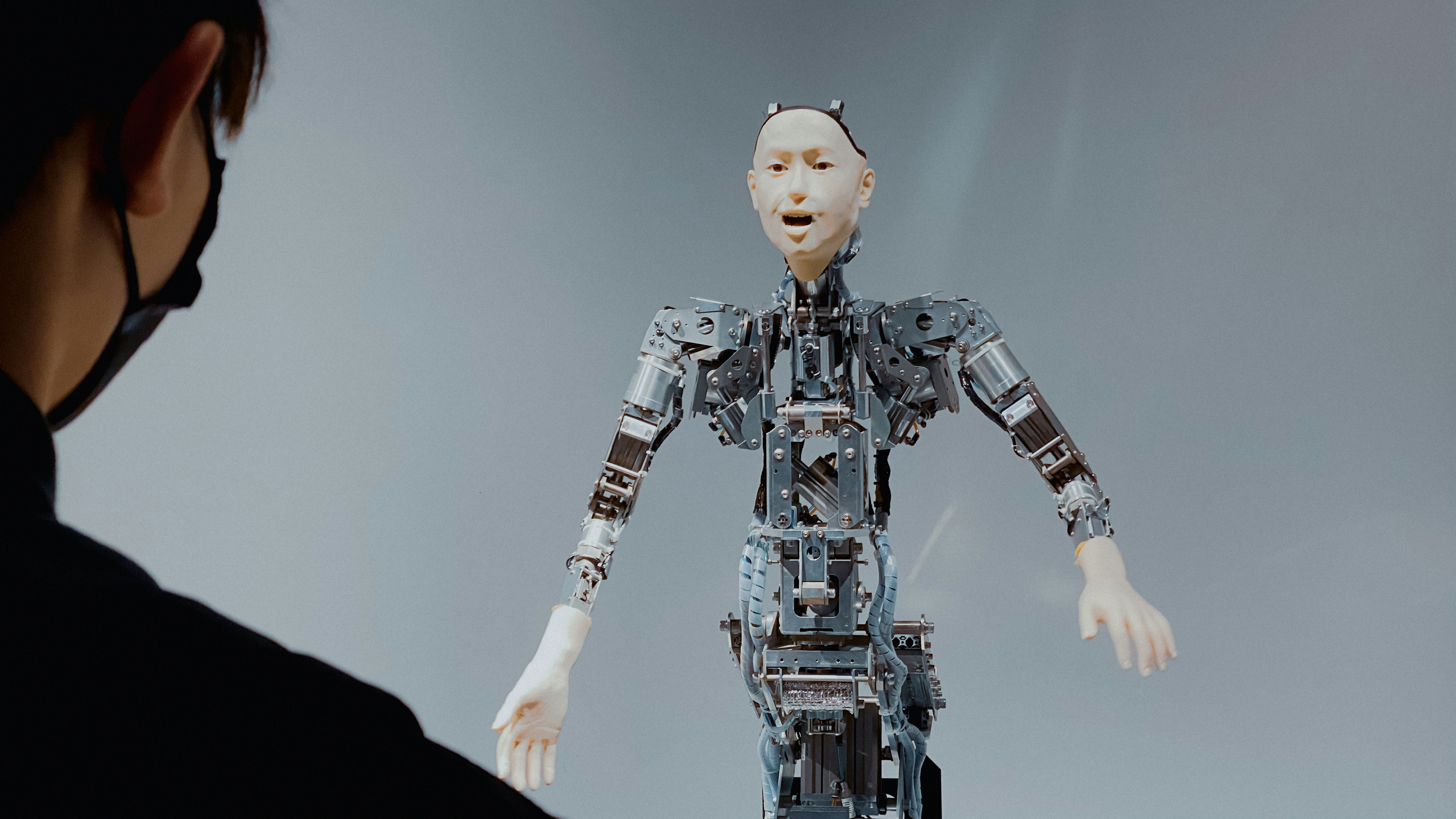

In other words, progress lies not with the stochastic parrot, the digital fortune-teller that sits inside every modern chatbot and predicts the next token in the text stream. It lies with models that interact directly with the external environment, receive feedback from it, and constantly train on it. I see this becoming possible only with the widespread introduction of robots into people’s everyday lives.

At the same time, it is important to note that today we poorly understand what kind of experience AI will get when it begins to learn not only on text corpora, but by comprehensively analyzing sounds, images, physical interaction with objects. This will become possible with the active adoption of autonomous anthropomorphic robots.

2Digital: Such robots already exist today…

Siarhei: Yes, but these are mostly experimental prototypes. Recently, the IEEE Communications Society published a study saying that anthropomorphic robots are essentially not needed by anyone, because the quality of their work is significantly lower than that of humans.

There was a lot of hype around supplying such robots to manufacturing. But it turned out that manufacturers still don’t understand how to use them meaningfully. At the same time, robot suppliers find it far more appealing to fill single serial orders than to cover the many varied needs of retail.

And the assumption is that, whatever progress is made in neural networks, it will not proportionally increase sales of anthropomorphic robots.

2Digital: And what do you think about this version: a social catastrophe awaits us — people deprived of work, which AI took from them, will rebel…

Siarhei: As a fan of Frank Herbert’s books, I want to remind you about the Butlerian Jihad in his Dune — a holy war that people waged against “thinking machines.” I’m saying this because up to a certain point humanity really retains the possibility to go out into the street and solve all problems with AI through a revolt. There is always the possibility of a turn toward neo-Luddism (Luddism — a movement of English textile workers in the early 19th century who protested the worsening of working conditions and cuts in pay by destroying new machines — ed.).

Besides that, it is worth remembering that in companies that create and promote AI there are whole departments engaged in working with public opinion, and I am sure they calculate how to minimize the probability that people will go out into the streets.

AdGI vs AGI: the business model that can save or kill the bubble

2Digital: Everyone talks about an AI bubble. Do you see it?

Siarhei: It brings to mind the dot-com bubble of the late 1990s. Companies are already spending hundreds of billions of dollars on chips and data centers. And what is the real revenue? So far, profits are completely out of proportion to the costs. There was also an excellent Goldman Sachs report arguing that LLMs in their current form will never become self-sufficient. I’ll admit right away that this sounds very categorical. But common sense also tells me that such a cost imbalance will be very hard to make up for.

For quite a long time I’ve had one very jarring thought: the only way to close this gap is advertising inside LLMs. And I see that I’m not the only one thinking this. Recently someone wrote a post saying that what’s coming in the near future is more likely AdGI (Advertising General Intelligence) than AGI (Artificial General Intelligence). Moreover, AGI is unlikely within at least the next three to five years, while AdGI could arrive tomorrow, because it neatly closes the gap between hardware costs and the economic profit being generated.

So there are three paths.

The first — the bubble bursts.

The second — something like AdGI appears.

The third — AI makes a real breakthrough, becoming truly useful infrastructure, similar to what happened with the Internet in its time.

If everything stays “as is,” it will take just a couple of years for the large corporations investing in AI development to start asking the “founding fathers”: guys, where is the profit?

Because essentially, the main fuel for growth in the AI market right now is hype. That in itself can count as a sign of a bubble: marketing running ahead of actual capabilities. Plus, old methods are being repackaged under an “AI-powered” label.

Hype is the fuel for investment. Altman’s public presentations look like Apple presentations or Starship launches. But behind the bold statements, if you listen closely, there have been no global breakthroughs in recent years. How long will investors keep listening to claims that, thanks to AI, we will be able to explore the Universe within the next twenty years and find a cure for cancer with neural networks, without any real proof?

Here, for example, we are told that, thanks to AI, hundreds of new materials have been discovered — and on closer inspection they turned out not to be new (but old ones with, for instance, elements swapped around — so-called “structurally naive compounds”), or they contained some wild elements like promethium or protactinium, which pushes their production beyond the bounds of common sense (the so-called synthesizability problem).

Recently there was big news that a neurogen had designed a fully fledged Linux computer with 843 components, and that it booted on the first try. But when you start digging, it turns out the neurogen simply designed two printed circuit boards, based on a ready-made schematic, and without taking into account what those boards were for. Remove the hype word “AI” from the story, and you get something that ordinary routing programs were already doing back in the 1970s.

2Digital: Can AI become an instrument of terror in the form in which it exists now?

Siarhei: It has in fact already become one. Society now lives in two realities: the physical one, our familiar world, and the informational one, hidden behind the screens of smartphones and computers. And neural networks have become an instrument of terror through personalized disinformation and social engineering.

We see hundreds of reports of people’s voices, and even their video likenesses, being generated to con others out of money. Entire waves of fakes and disinformation are created ahead of elections in different countries. Waves of scams are generated.

Terror is already here; it’s just that we are used to being frightened by threats from the physical world and don’t always give credence to the war of narratives unfolding in the information space. At the same time, informational terror leads to the same real physical victims, if not more. And psychological recovery from info-terror may turn out to be significantly harder than from some physical impact.

Worst of all, neural networks are now being used as an incredible propaganda machine the likes of which has never existed in the history of humanity.

2Digital: Do you see that the creators of artificial intelligence are genuinely concerned that new capabilities may fall into bad hands, and that they are making efforts to prevent this?

Siarhei: I think that so far the only thing that concerns them is what is written in regulatory acts. They don’t give a damn about the fate of humanity.

I want to remind you of the Asilomar conference that took place in 2017. At it, 23 principles of responsible AI development were formulated: safety, transparency, responsibility, human control, taking social consequences into account. An ever larger portion of these points is being ignored by developers of modern LLMs. What’s more, some “founding fathers” are beginning to state in plain text that we should remove absolutely all restrictions from artificial intelligence.

Indirectly, their intentions are confirmed by the fact that in recent years they have been cutting precisely the safety departments in their companies. For example, in 2024, after the departure of Ilya Sutskever and Jan Leike, OpenAI pointedly disbanded its “Superalignment” team, which dealt with the ethics and safety of neural networks. Safety departments have been eliminated at other major companies as well.

For example, in March 2023 Microsoft completely eliminated the AI Ethics & Society team, which worked to ensure that the principles of “responsible AI” were actually taken into account in product design.

The Financial Times has reported that Microsoft, Meta, Google, Amazon, and Twitter/X all cut their “responsible AI” teams, which raises questions about safety priorities amid an accelerating AI race.

In 2024, Google reorganized its key responsible-AI team, Responsible Innovation (RESIN): its leaders left, and the team was effectively split up.

2Digital: One could object: these are problems that should be monitored and fixed by regulatory systems of different countries. But now we see a situation in which most problems that arise in relation to AI ethics are ticked off with a checkbox in the user agreement. When, in your view, will the European Union and the U.S. understand that it’s time to treat these issues seriously?

Siarhei: When some serious catastrophe happens. As soon as the problem reaches a mass scale.

Do you remember we talked about the two worlds we live in: the physical and the informational? Well, we have all learned the rules of the first world, and the consequences for violating them often show up immediately. If you cross the road at a red light, a car hits you; if you steal in a store, you go to prison.

In the informational world there is a delayed effect: the consequences of your actions may not show up right away and can have a cumulative effect, imperceptibly merging with the actions of other people.

We live halfway in the informational world, in an informational ecosystem. But the rules of behavior in it, the conditions of life in this informational society, are not studied at all. Why?

One could slip into conspiracy theories and suggest that the current state of affairs benefits some part of society. But I lean toward “Heinlein’s Razor,” which, by analogy with “Occam’s Razor,” says that you shouldn’t attribute to a conspiracy what can be explained by ordinary stupidity.

2Digital: How do you assess the existential threat from AI that will surpass human intelligence by its parameters?

Siarhei: As Yann LeCun, a professor at NYU and one of the creators of convolutional neural networks (LeNet), put it: “Before we reach Human-Level AI (HLAI), we will have to reach Cat-Level & Dog-Level AI.”

As I said above, anthropomorphic AI is unlikely to take off. What’s more, the current architectures of large language models are autoregressive: they generate text one token at a time, each step conditioned on the tokens that came before. In the AI market, the last word belongs to programmers, not to philosophers or cognitive scientists who are genuinely immersed in personality theory. What can a programmer, limited by the window of their SDK, know about how real intelligence functions?

Consider it an axiom: there will be no transformer, chatbot, or the like that “surpasses human intelligence,” because there is no agency, no autonomous goals, and no stable ability to improve itself outside an environment. The only thing to fear is the moment when something like what Sutton describes begins to appear.

2Digital: We asked AI to pose three more critical questions about your work. Here is what it suggested. First question: what observable facts or results would make you revise your assessment of AI risks (up or down)? Name specific triggers, not generalities.

Siarhei: First, when it starts giving me real, at least half-valid references. Second, when it stops using manipulation. Note that it is currently impossible to get a dry, academic answer from AI: it quickly slides into padding and filler. Third, when AI learns to tell me plainly that it doesn’t know the answer to a question.

A probabilistic model is a probabilistic model: a stochastic parrot that has grown up on human texts. The more often something was mentioned in those texts, the lower the probability of hallucination. If you ask about something rare and narrowly practical, a hallucination will happen with almost 100% probability. And what I want to hear is: “I don’t know.”

2Digital: Second question from the AI: if tomorrow you were forbidden to use AI in your work, what would you lose first?

Siarhei: I would lose speed. At the same time, if I were offered the chance to go back now to, say, the 2019 version of Google Search, I wouldn’t be very upset: for me it was an effective enough working tool. With the appearance of AI, Google Search was deliberately made worse. At one point there were many posts from people who had noticed that Google Search suddenly began working significantly worse.

At the same time, I really like how neural networks work as a secretary, transcribing audio into text, translating information into different languages. This really looks very cool; it would be a shame to lose that achievement.

2Digital: Third question from the AI: where have you yourself, as a researcher and public expert, already been wrong in your forecasts about technologies and risks? What did that mistake teach you? And how do you now protect yourself from self-deception?

Siarhei: Without false modesty, I’ll say that I have rarely made forecasts, and when I did, they generally came true, though sometimes with a delay. I have always believed it is better to overestimate a threat than to underestimate it. I believe that healthy pessimism is a good quality, as is reasonable alertness.

In today’s chaotic, unpredictable, fiercely roaring twenties, the one who is ready for anything is the one who expects nothing good.