More than 40 million people worldwide turn to ChatGPT with healthcare questions every day, according to a report released by OpenAI today. The figure underscores a dramatic shift in how Americans in particular are seeking medical information in response to a healthcare system many of them perceive as fundamentally broken.

Health-related messages now make up more than 5 percent of all platform conversations globally. Nearly 2 million messages each week focus specifically on navigating health insurance, a telling indicator of the administrative complexity of American healthcare.

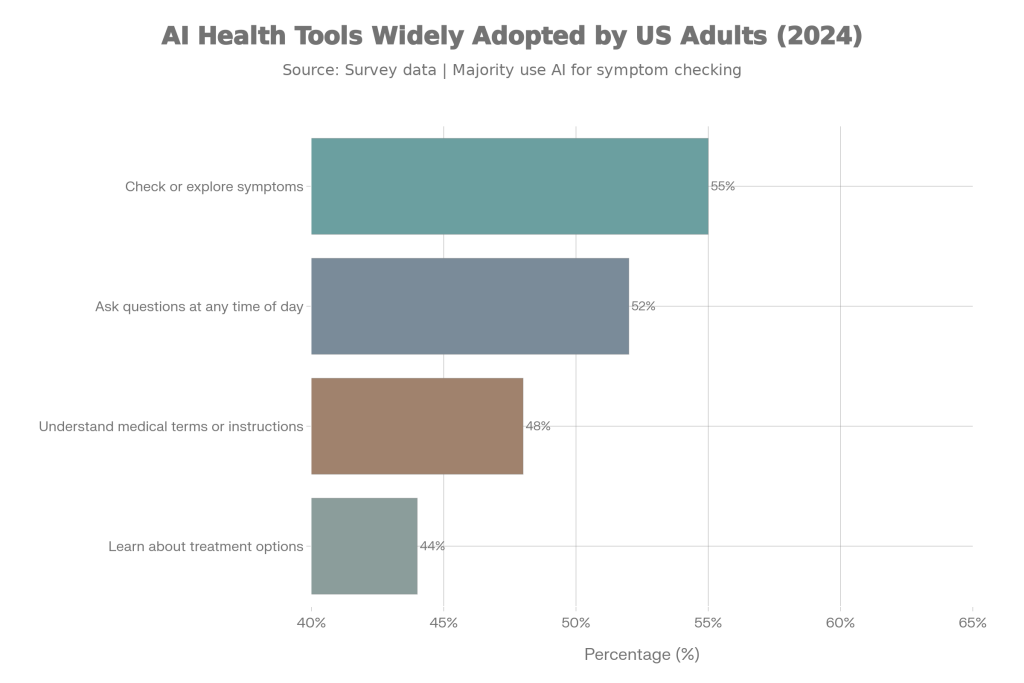

Among US adults who use AI to help manage health-related questions.

Adapted from: *AI as a Healthcare Ally: How Americans are navigating the system with ChatGPT*

OpenAI’s report presents this trend as a validation of ChatGPT as a healthcare ally, noting that patients use the platform to understand symptoms, decode medical jargon, prepare for clinical visits, and navigate insurance bureaucracies.

Yet this explosion in AI-driven healthcare guidance exists in a profound regulatory and legal gray zone, where neither ChatGPT users nor the company itself has a clear accountability framework if the advice proves dangerously inaccurate.

Mounting evidence suggests that large language models pose significant risks in healthcare contexts. A meta-analysis published in World Psychiatry comparing various chatbot models found a “significant shortfall in evidence regarding their medical or near-medical usefulness for people in crisis”.

OpenAI’s Service Terms explicitly state that “Our Services are not intended for use in the diagnosis or treatment of any health condition. You are responsible for complying with applicable laws for any use of our Services in a medical or healthcare context.”

Legal scholars and regulators increasingly question whether such disclaimers can shield companies from product liability claims.

Experts note that while online platforms such as Google and X receive broad protection under Section 230 of the Communications Decency Act, that protection likely does not extend to OpenAI, which actively crafts individualized responses rather than passively transmitting information.

Several states have enacted laws specifically addressing AI-generated health advice: Utah prohibits mental health chatbots from misrepresenting themselves as providing professional care, while Nevada bans AI systems from providing mental and behavioral health services outright.

Notably, OpenAI’s healthcare report advocates for clearer regulatory pathways rather than resisting oversight. The company explicitly urges the FDA to “move forward and work with industry towards a clear and workable regulatory policy that will facilitate innovation of safe and effective AI medical devices.”

For now, ChatGPT has become an ally born of necessity, and the trend is especially pronounced in America's most underserved regions. In areas defined as "hospital deserts" (locations more than a 30-minute drive from a general medical or pediatric facility), ChatGPT averaged more than 580,000 healthcare-related messages per week during a four-week period in late 2025. Across the country, nearly half of all rural hospitals operate with negative margins, and more than 400 hospitals across 38 states are considered vulnerable to closure.